
Hema Chamraj (Director of Technology Advocacy at Intel AI) talks about responsible considerations to address limitations presented by AI in healthcare and drive better patient outcomes for all.

Like what you see here? Our mission-aligned Girl Geek X partners are hiring!

- See open jobs at Intel AI and check out open jobs at our trusted partner companies.

- Watch all Elevate 2021 conference video replays!

- Does your company want to sponsor a Girl Geek Dinner? Talk to us!

Transcript

Sukrutha Bhadouria: Hema is the Director of Technology Advocacy with AI for Good at Intel. She’s involved with Intel’s pandemic response technology initiative to collaborate with customers and partners to address COVID-19 challenges. This is a really important effort. So thank you, Hema. She has a passion for technology and for the role of AI in addressing issues in the healthcare and the life sciences sector. Welcome, Hema.

Hema Chamraj: Thank you. Thank you, Sukrutha. Super. Hey, I’m really excited to be here to talk about this topic, Responsible AI for Healthcare. Before I talk about responsible AI, I want to go first into health care and then I’ll talk about AI for healthcare and then I’ll get to responsible AI for healthcare. So as you know, all of us here as patients, as consumers of healthcare, this is something that we all understand very well.

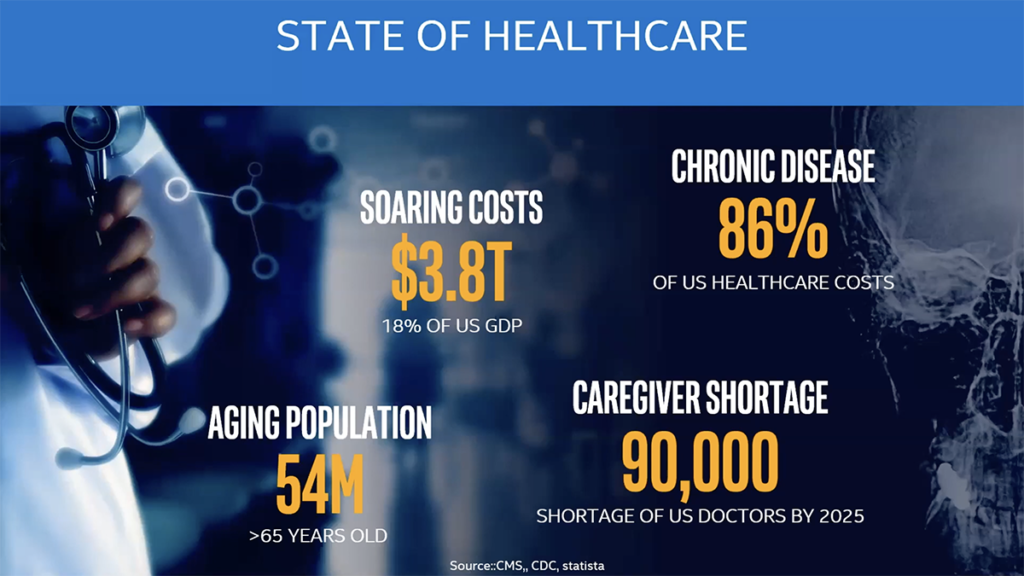

Hema Chamraj: Our health care system is really not in great shape. As a nation, we spend the most, almost 20% of GDP, on our healthcare system, but we have one of the largest sickness burdens. If you think about it, nearly 50% of our population has at least one chronic condition. And life expectancy is also lower than in Canada and Japan.

Hema Chamraj: So we are not doing that great. And as a population we’re aging, and as we age it compounds the problem. And so what we end up with is more sickness and more costs, and one of the things that is also not helping is the fact that we’ll have a shortage of clinicians. So we’ll have fewer people to help us take care of ourselves.

Hema Chamraj: And so this is the state of healthcare we are in when it comes to cost, access, and quality. Really, it’s not looking good. And this is a form I’ve used multiple times over the years. I just have to change the numbers and it’s not trending in the right direction because in reality, we’re in trouble in terms of the healthcare system, and we need help from many, many, many directions, and we need innovations and tools.

Hema Chamraj: And I’m very, very enthusiastic about AI because of some of these. And here are some examples where I can try to explain why and how AI can help. When we think about healthcare, right? Just like every other sector we are collecting data and the data tsunami.

Hema Chamraj: Almost 30% of the world’s data is healthcare data. And it’s hard to think about, okay, if we have all this data, how much, really, are we going to be using? Actually, only 1-2% of the data is really being put to work. And it’s humanly impossible, really, to be looking at this tsunami of data and make sense of it.

Hema Chamraj: And so in that sense, AI has made some real magic, especially in medical imaging and some of the areas where it has found all these hidden insights, insights that are humanly not possible to find, actually. So that gets me all excited about, we need some tools to think of it. If we could really go after this 90+ percent of the data that is today not being put to work, if we could look at it, how many more problems could we be solving? And so that gets me excited.

Hema Chamraj: And also the other example here I want to call out is, I talked about clinician shortage, right? As you look at developing nations, this problem is much more of a dire situation. Think about developing nations with a billion-plus population and fewer than 80,000 radiologists to serve this population, right?

Hema Chamraj: And those numbers actually could be, official numbers could be much lower than that in China. And think about that. This is the problem. And then imagine that AI can look at all these cases and say, 70% of these cases actually don’t need to be looked at because they’re normal, and then 30% should actually be looked at by the physician because they need attention, right? And so just imagine that, right?

Hema Chamraj: Now actually that is reality, because we have worked with some of our customers and partners. Actually in China, it’s happening, not for everything, but lung nodule detection is one of the problems that we were able to address. And there’s no reason to think we cannot address other conditions, also. And so that is one example. And the other one is about drug development.

Hema Chamraj: Drug development really, badly needs some kind of disruption, because you’re talking about billions of dollars and sometimes a decade for each drug, and only 10% of these drugs actually make it to the final. So we have this really tough problem. And already, with the recent vaccines, we are seeing that we’re making progress, and AI is playing a big role in it already.

Hema Chamraj: And if you think about Moderna, one thing I feel like people may not have noticed is that Moderna actually was able to put out a booster vaccine for the latest COVID variants in less than 30 days. And that’s unheard of. And so that gets me really excited about what all AI can do. And of course, if you ask people about AI for COVID, depending on who you ask, people may say, well, it didn’t really fulfill the promise, but I am in the other camp. I say AI has actually played a role here because we are seeing it firsthand with our own partners and customers.

Hema Chamraj: And I just put in a few examples here, looking from the left-hand side on the disinfecting robots to telehealth. And then I talked about medical imaging being one area where we have seen a lot of promise, especially when we had COVID testing shortage, actually a lot of the MRI and the X-ray, the tools were very helpful to detect COVID. And then you have on the bottom section, you have virtual ICUs. We had ICUs to complement to make sure that the ICU beds are going low. There were some solutions there.

Hema Chamraj: And then there are more around genomic sequencing of these virus strains, and then AI drug repurposing. This is an example where drugs that were created for a different purpose were repurposed: Dexamethasone, Remdesivir, the one that President Trump took when he was sick. That also involved some level of AI for drug repurposing.

Hema Chamraj: So if you ask somebody like me, who’s an AI enthusiast, has AI been helpful? And is it an important tool? My answer is a hundred percent yes, right? And of course, people who have been at AI, they’ve understood that it has to be about responsible AI. That’s not something that they take lightly, but what has happened for us in this year has been a spotlight, right?

Hema Chamraj: COVID has put a spotlight on really the disproportionate burden and the inequities in healthcare, right? That’s come to light in a way that we are all forced to step back and take a look. If you think about the 4.5 times the hospitalization rate, or the two times the mortality rate of black versus white, it’s data that you cannot dispute, right? Everybody’s sitting and thinking, and the first question is who’s to blame, right? That’s what most of us tend to do, but the question, more than that, is where do we start? How do we fix it? These are all questions that we’re all asking.

Hema Chamraj: So we don’t need to go to AI immediately. One thing I would not like to see is people running towards AI as somehow being the problem. We should start with the system that has been in place and the processes that have been in place for nearly a century now. And I want to talk about that as a starting point.

Hema Chamraj: Now, before I say anything, I want to say that our clinicians are the best. We need more of them. I mean, they are in this service to help us. So it’s less about the clinicians. It’s about the tools that clinicians need as they look at their patients to make decisions, that they need tools. And let me see, am I progressing? Yeah.

Hema Chamraj: So I just pulled out a few of these risk scores that have been in use for a long time. And these are used by clinicians to make decisions on diagnosis and referrals and so on. There’s the AHA, the American Heart Association, there’s a score, and a study as recent as 2019 showed that black and Latinx patients with heart failure who go to the emergency department in Boston are provided lower levels of care.

Hema Chamraj: And somehow it was actually built into some of the scores from AHA. And then there is another score called VBAC, Vaginal Birth After Cesarean. And that score has been attributed to increased rates of cesarean sections for non-whites. And so I could go after each one of them; there’s eGFR for kidney failure. And so in every disease category, there are so many scores that are embedded in the system that are being used on a daily basis, right? And so the question then is, what are these scores? I mean, what’s the basis of these scores? And it’s not all very clear. I mean, clearly they were done for a reason, but it’s not very clear.

Hema Chamraj: The assumptions are very invisible, and race is often used as a proxy to explain the genetic differences, and unconsciously it has been propagating bias in the system. Now, one thing that I strongly believe is that all of us look at the world based on our experiences. And so one example for all of us women here, I don’t know if you experienced this, but when women go to the hospital and talk about pain, it’s usually chalked up to something psychological.

Hema Chamraj: And that is not just something that happens once in a while. It happens routinely. And it is something about a “brave men” and “emotional women” kind of a syndrome. So there are some things that are very embedded that propagate physician bias without them knowing it, right?

Hema Chamraj: And so this is the system there is. And then it happens more for people from the underrepresented and minorities, right? And so it requires all of us to look and understand what they’re experiencing. In order to even understand AI, we have to understand the experience. And so, there is the PBS documentary that I’ve started to look at, and this here is a story of one lady.

Hema Chamraj: She goes in for a gallbladder surgery, and then she is discharged. And then she complains of pain, repeatedly, and they repeatedly say nothing is really at fault. And then when she collapses, she comes in and it is found that she has a septic infection, which is really a hospital-acquired infection, which can be deadly. So, that’s just one example.

Hema Chamraj: And you really need to understand and look at, hear the experiences of people to really understand the level of fear, the level of distrust that is in the system, right? And why does it matter? It matters because it translates to data that’s in the system. If you are so fearful that you don’t show up to the healthcare system, then the data is missing or misrepresented, right?

Hema Chamraj: And so it’s an incomplete picture, but it has other things that are based on bias and that are based on beliefs. And the additional thing we are seeing these days is these social determinants. These are things beyond just the clinical score, right? It talks about, where do you live? How do you have access to healthcare?

Hema Chamraj: And a lot of these questions, which are called social determinants, are largely missing. I mean, we are working towards it, but what we end up having is this incomplete data that is then being used as input to develop algorithms, right?

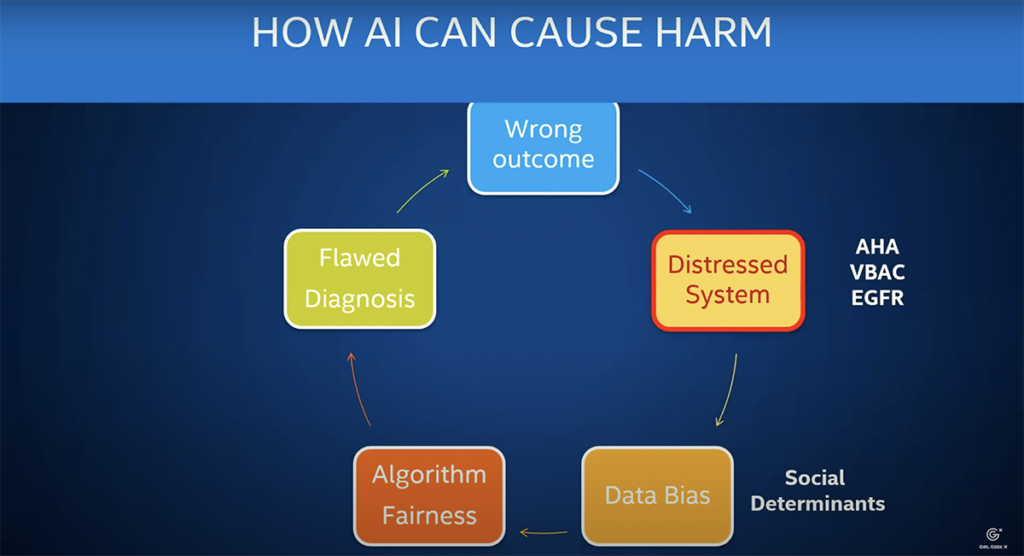

Hema Chamraj: Just imagine what happens with that. You have data that is incomplete. So it’s garbage in, garbage out. And there is one highly publicized study from 2019 in which people with good intentions tried to build this algorithm and said, “Let’s look at the data and say who is more sick, so we can find out who should get the highest level of care.”

Hema Chamraj: And it turns out that the white population were determined to be more sick. And obviously it didn’t add up and turned out that the algorithm was flawed in terms of the design, because it was looking at cost as a proxy to say who are more sick. So it’s a flawed design looking at incomplete data from a distressed system. And obviously it leads only to a flawed diagnosis and hence wrong outcomes.
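
To make the proxy problem concrete, here is a minimal Python sketch. It is not the algorithm from the 2019 study: the patients, group names, and dollar amounts are synthetic, and it assumes group "B" faces access barriers so that the same level of illness produces less spending. Under that assumption, ranking by cost leaves out a patient who is just as sick.

```python
# Synthetic, hypothetical patients: (group, chronic_conditions, annual_cost_usd).
# Assumption: group "B" has less access to care, so equal illness shows up as lower cost.
patients = [
    ("A", 4, 12000),
    ("A", 2, 6000),
    ("A", 1, 3000),
    ("B", 4, 5000),
    ("B", 2, 2500),
    ("B", 1, 1200),
]


def top_k(rows, key_index, k):
    """Return the k rows with the largest value in column key_index."""
    return sorted(rows, key=lambda row: row[key_index], reverse=True)[:k]


# Flawed design: use cost as a stand-in for "who is more sick".
by_cost = top_k(patients, 2, 2)

# Alternative label: rank directly on a measure of illness.
by_need = top_k(patients, 1, 2)

print("Selected by cost:", [p[0] for p in by_cost])
print("Selected by need:", [p[0] for p in by_need])
```

With these made-up numbers, the cost ranking selects only group A patients, while ranking by number of chronic conditions selects one patient from each group.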

Hema Chamraj: So AI is not where the problem starts, but it does do a few things. It reinforces or amplifies the bias and the power imbalances, right? And the people who develop the algorithms, I mean, it’s hard to imagine that they understood the experience of the people against whom this will be used, right? I mean used towards. The voices of the people who got the outcomes were not part of this; their voices were not represented.

Hema Chamraj: So this power imbalance continues. It becomes this vicious cycle, unless we stop and say, we really need to step carefully and see how we are developing AI. Are we developing AI responsibly? Is it equitable AI? Is it ethical AI? Is it based on trustworthy systems? So this is why I feel like responsible AI now is important, because as AI enthusiasts, right, we tend to get all excited, including me.

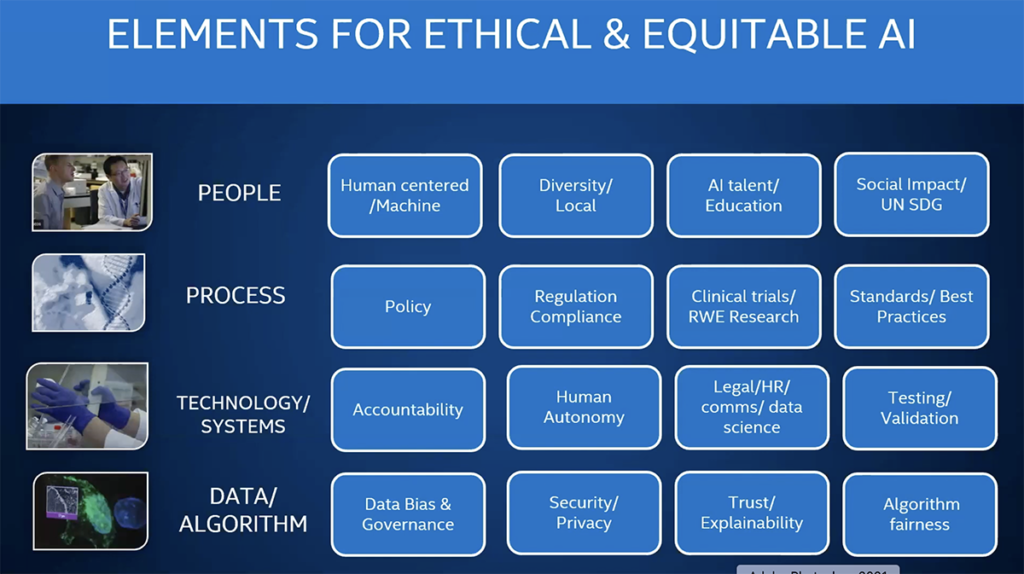

Hema Chamraj: I mean, I’m one of those people who says that we cannot do, we cannot fix the healthcare system if we don’t have things like AI, right? But I think it requires us to take a step back and see how we should be thinking about responsible AI. And there are so many things, it requires an hour on its own, but I would say we should go back to saying design AI, the design element of it is, let’s start with asking the questions.

Hema Chamraj: Why are we developing this algorithm? Who is it going to impact? How is it going to impact? What are the intended consequences or what are the unintended consequences? And then how do we remedy it? So these are the questions that we should start thinking about and start with designing AI for good and having a very comprehensive approach of the people, process, technology, systems, data, and algorithms.

Hema Chamraj: I mean, we have done some of this already, and this comprehensive approach is something that we are used to, and we need to apply that to AI because as technologists, and I am one of them, we tend to get all enthusiastic when we run towards AI. The questions we ask are: what is the speed, what’s the accuracy, what’s the sensitivity, what’s the specificity and the area under the curve. All of these questions are where people tend to go when they look at an algorithm, to measure its performance or its efficiency, right? But I think the question we need to focus on is how do we design AI for good?
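
One way to connect those performance questions to the question of who is affected is to report the same metrics per patient group, not only overall. A minimal Python sketch, assuming binary labels (1 = condition present); the group names, labels, and predictions are hypothetical and do not come from the talk:

```python
def sensitivity_specificity(pairs):
    """Compute (sensitivity, specificity) from (true_label, predicted_label) pairs of 0/1."""
    pairs = list(pairs)
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # sick, flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # sick, missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # healthy, cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # healthy, flagged
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Hypothetical records: (group, true_label, predicted_label).
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 0, 0), ("B", 0, 0),
]

overall = sensitivity_specificity((t, p) for _, t, p in records)
per_group = {
    g: sensitivity_specificity((t, p) for grp, t, p in records if grp == g)
    for g in sorted({grp for grp, _, _ in records})
}

print("overall:", overall)
for group, metrics in per_group.items():
    print(group, metrics)
```

In this toy data the overall sensitivity is 0.75, but it is 1.0 for group A and 0.5 for group B, a gap that a single aggregate number would hide.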

Hema Chamraj: And there are many frameworks, but I’ve looked at these different lenses through which we should be looking. Data and algorithm is very important because it’s about the data there. That’s where it manifests, AI manifests, and we have to understand the data governance. Where is the data coming from? How is it going to be used? The security, the privacy, and so on. Those are all truly very important.

Hema Chamraj: But, I would start at the beginning. At the people level, right? It has to be human centered, the human agency. And it is about not just the developers who are developing it. It has to have the voices of a diverse community of social scientists, physicians, the patients, and even the developer voices should include all of us.

Hema Chamraj: People who will have AI algorithms used upon them. We need to develop the education pipeline so that we can be part of those developer voices. And so there’s a lot to be talked about here, but I would just say about the policy and the regulation, those are two areas where we are just starting out. I think we should pay more attention there because we need accountability and so on. But having said all of this, I still feel we have so much we can do together.

Hema Chamraj: We can lay this foundation for good and bring the best outcomes for all. So, that’s where I think I should be stopping. I’m getting a signal, guess I’m stopping there. Just saying that healthcare, we are all in here for healthcare. It’s not in a good state. AI can help, but we need responsible AI for ethical and equitable AI. And together we can do it.

Angie Chang: Absolutely. Thank you so much, Hema. That was an excellent talk.
