Speakers:

Aline Lerner / CEO & Founder / interviewing.io

Gretchen DeKnikker / COO / Girl Geek X

Transcript:

Gretchen DeKnikker: Hey, everybody, welcome to our next session. A couple of housekeeping notes. Make sure you submit your questions in the Q&A at the bottom and then vote them up so that we know which ones to ask when we get to the Q&A section. The videos will be available online just after the sessions wrap up. Without further ado, I’m so excited. I just met Aline, and I became a super fan after one phone call. It was a little crazy, and now I stalk her.

Aline Lerner is the co-founder and CEO of Interviewing.io, which she’ll tell you a little bit about, but I think the product is especially interesting for the audience here. She’s going to talk about the art of the interview, looking at it not just from your side as the interviewer, but at the other elements too, like what kind of feedback you get. So, welcome, Aline.

Aline Lerner: Hi, everybody. I’m also a huge fan of Gretchen’s and have been stalking her since I met her, so it’s definitely been mutual. Really excited to talk to all of you today about technical interviewing, probably through a lens that you haven’t seen before, because we have some cool data that normally you don’t get. Rather than talking about what makes someone a good interviewee, today I’m going to talk a little bit about what makes somebody a good interviewer.

I’m the CEO and co-founder of a company called Interviewing.io. We are a practice platform for technical interviewing, but we’re also a jobs platform, so if you do well in practice, you get to talk to top companies. The cool thing is that everything is anonymous. We’ve collected a ton of data, and I’m going to share some of what we’ve learned today. I’m just going to jump right in. All right. Great.

How it works: this is a little bit of setup so you know where our data is coming from. Once you’re a user of our platform, you can see some time slots and grab one. Then, at go time, you log in and get a mock interview with an engineer from a top company who is good at interviewing and good at giving feedback. Top performers get to interview with companies right on our site, and those interviews are anonymous as well. After each interview, whether it’s real or practice, both sides fill out feedback, which you’ll see in a moment. This is the feedback form interviewers fill out about interviewees. Hopefully, you can see some of those questions. We ask the interviewer to rate the candidate on things like technical ability, problem-solving, and communication. Then we ask the candidate to rate their interviewer, and that’s what this talk is about.

Normally, as most of you know, an interview is one-way. You don’t really get to rate your interviewer, and if you do, it’s in a survey afterwards, not right then. We ask candidates everything from whether they want to work at this company and with this person to how excited they’d be. We also ask how they think they did, and that will come up at the end. Great.

We have a ton of data. We’ve done about 20,000 interviews on the platform, and I’m going to get down to brass tacks and show you feedback snippets. We’ve tried to distill a lot of signal from these and come up with a few broad categories that describe the traits of good interviewers. This is going to be data-driven and, hopefully, light on platitudes. I’m really excited to answer your questions at the end.

Before I get into the details, one thing: a lot of people think that working at a company with a top brand is a really good crutch. If you work at a Google or a Facebook, you don’t have to be as good of an interviewer. That’s not true from the data we’ve seen. In fact, we saw no statistically significant relationship between brand strength and whether candidates wanted to work with employers on our site. Brand will get candidates in the door, but once they’re in the door, they’re essentially yours to lose, so keep that in mind.

The first big thing we noticed in candidate feedback was that when you’re interviewing people, it’s important to be a human being. For each of these broad categories, I’m going to show you exactly what the candidates said. For instance:

- “I like the interview format, particularly how it’s primarily a discussion about cool tech as well as an honest description of the company. The discussion section is valuable and may better gauge fit. It’s nice to have a company that places value on that.”

- “Extremely kind and generous at explaining everything they do.”

I haven’t listened to all 20,000 of these interviews, but I’ve listened to a lot, and the best interviewers are people who take the time to get to know the candidate, even though interviews are anonymous. What are you working on? What do you want? Then they create a narrative where their company is the next logical step in the candidate’s journey: everything you’ve ever worked for is going to culminate in you working here.

Here’s the bad:

- “A little bit of friendly banter, even if it’s just, ‘How are you doing?’ at the beginning of the interview would probably help the candidate relax.”

- “I thought the interview was really impersonal. I could not get a good read on the goal or the mission of the company.”

Choosing the question. This is a much-debated topic, and I know everybody has opinions on what makes for a good interview question. We just have the data, so I’ll tell you what the data said. Here’s feedback from people who thought the question was good:

- “This is my favorite question I’ve encountered on this site. It was one of the only ones that seemed like it had actual real-life applicability and was drawn from real or potentially real business challenges.”

- “I like the question. It had a relatively simple algorithm problem and then built on top of that.”

One of the recurring themes here is that candidates are used to generic algorithmic problems, and what really gets them engaged is taking it to the next level and tying it to something your company actually does. This is especially true if people may not have heard of you, or if you’re in a space that doesn’t get people excited by default. Anything you can do to get in the candidate’s head, ask them something interesting, and have it stick after the interview is over is going to help. Candidates also feel like you put in effort.

One of the things we’ve noticed is that value asymmetry in an interview, where the candidate is expected to put in work but the interviewer isn’t, is not good. You want it to be clear that you’ve put in work yourself.

Here, let me show you some examples of bad questions:

- “This is not a good interview question. A good interview question should have more than one solution with simplified constraints.”

- “Question wasn’t straightforward and required a lot of thinking, understanding of setup.”

You don’t have a lot of time with a candidate. You want to make sure the time you do have is spent building a connection with them and actually seeing whether they can think, rather than making them jump through hoops.

Someone asked, “Is there any way to sharpen the image? Text is blurry.” We’ll send out these slides afterwards. Sorry about that, guys.

That’s one of the most important things, too: making sure you’re getting signal from candidates in a way that’s not arbitrary, and setting up the problem to gauge whether somebody’s a thinker and a problem solver rather than catching them on arbitrary aha moments.

Writing a really good interview question is hard. It takes time, and if you’re going to tie it to something you do at work, it’s even harder.

One of the best tricks I’ve seen for this is setting up a shared Google Doc for your entire team, or really any collaborative software; it doesn’t matter. Any time you do something at work that made you think, just throw a quick line in that doc. It doesn’t have to be cool. The bar can be really low, so you don’t have to worry about it; any time you do something you think was non-trivial, write it down.

Then you can come back later, look at all the cool things your team has done, and use that as a jumping-off point to craft questions that are unique to you. Candidates will think, “They did put in the work,” and you’ll stick in their heads a little bit.

Asking the question itself. One of the best interviewers I ever met was a chief architect at a large software company. He used an expression I really liked: the purpose of an interview is to find out, “Can we be smart together?” That really stuck with me, and I think the way you ask the question can determine whether you can be smart with somebody else or not.

Here are traits of good interviewers when it comes to asking a question:

- “He never corrected me. Instead, asked questions and for me to elaborate on areas where I was incorrect. I very much appreciate this.”

- “The questions seem very overwhelming at first, but the interviewer was good at breaking it down. I like the fact that you laid out the structure.”

- “I’m impressed by how quickly he identified the typos in my hash computation.”

Engagement is, of course, important when you’re asking the question, so that you actually get to see what it’s like to collaborate with somebody.

Another really important part of this, and you can see it in the feedback, is layering complexity: taking a question that starts off very simply and then building on it in a number of different ways. You can set up benchmarks and say, “A candidate who’s good enough is going to get through the first three portions of the question. Somebody who’s really good is going to get through four, and someone who’s exceptional is going to get through five.” Somebody who gets past that is probably going to challenge the interviewer. The sooner you can turn something into a discussion between equals and an opportunity to collaborate and problem-solve together, rather than a one-way exercise where you’re trying to see whether somebody’s stupid or not, which is the worst way to interview, the better it’s going to be.

Here are some examples of poor interview feedback:

- “It was a little nerve-racking hearing you yawn while I was coding.”

- “What I found more difficult about this interview was the lack of back and forth.”

Anything you can do to engage with candidates and build on a question is going to help, and if you can couple that with the previous point, by coming up with questions that are original to your company and layering complexity in ways that other people couldn’t, you’re going to be in a very good position.

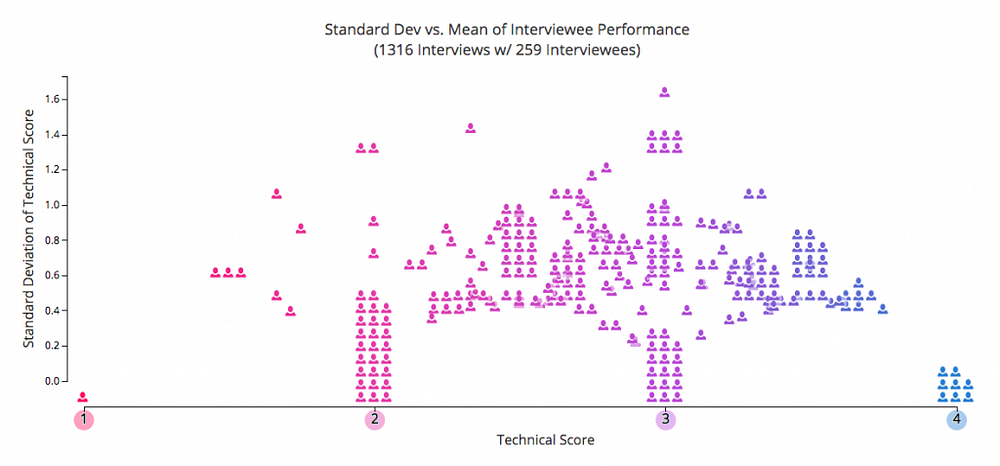

What happens after the interview? This is one of my favorite takeaways from our data, and it’s completely counterintuitive. As you recall, we ask candidates how they think they did in the interview, and we also ask interviewers how the candidate actually did. We graphed this. The x-axis is the actual score for technical ability, on a scale of one to four, and the y-axis is the perceived score.

As you can see, there’s quite a bit of imposter syndrome. In fact, we found that imposter syndrome plagues a disproportionate number of our users. So, what is imposter syndrome? It means you think you did poorly when you actually did well. Now, here’s the crazy part. If a candidate did well but thinks they did poorly, and you don’t give them immediate, actionable feedback, and let’s say you let them sit on it for days, they’re going to run a whole self-flagellation gauntlet.

They’re going to leave that interview and start thinking one of two things. Either they’re going to think, “Man, that company didn’t interview me well. I’m good at what I do, and I don’t think that company knew how to get it out of me, so they suck.” Or, even worse, they’re going to think, “Oh, I’m a piece of shit. Now they know I’m a piece of shit, and I totally didn’t want to work there anyway.”

What ends up happening is that unless you tell people they did well immediately after they did well, you lose a lot of good candidates, because by the time you get back to them, they’ve completely talked themselves out of working for you.

So, don’t let this happen. Don’t let them gaze into the abyss: give people actionable feedback as soon as possible.

Actually, I saw one of the comments. I want to leave a few moments for questions, but one of the comments on the side was “imposter syndrome is a women’s curse.” We ran some data on our platform to see whether imposter syndrome is more prevalent in women or distributed across both genders. As it turns out, men and women are equally plagued by imposter syndrome.

The other interesting thing we learned, and we haven’t written about this yet but we will, is that the better you are at interviewing, the more prone to imposter syndrome you are. The worse you are, the more likely you are to show the opposite, the Dunning–Kruger effect, where you think you did well when you, in fact, did poorly.

Thank you, guys. I’m really excited to answer some questions. My email and Twitter are on the slide, and I’m happy to answer questions offline as well. Sorry, I saw some of you say the slides were blurry. We’ll send them out afterwards; I’m not sure why that happened.

Gretchen DeKnikker: Great. Thank you so much. We have a few questions here, and you guys still have time to submit some and vote them up. So, the first question is: what do you think of take-home projects instead of whiteboard-style coding interviews, for those who have grown to dislike them?

Aline Lerner: Yeah. I wish I had it with me. A while ago I drew a picture called the value asymmetry graph, and I mentioned the idea in the talk as well. Value symmetry is the notion that both sides are putting in equal amounts of work. I think that if you’re a company with a top brand, you can get people to slog through a lot more shit than if no one’s ever heard of you. When you’re deciding as a company whether to use take-home challenges, one of the things you have to consider is, how badly do people want to work for you? If you’re Google or Facebook, people are probably going to be much more motivated to work for you than for some company that just started and has no funding, at least before they get into the interview, at which point the playing field levels out a little more. You can’t always look at those companies and say, “If they use a challenge, we can too.”

If you do use a challenge, just like with interview questions, the best ones tend to be thoughtful and representative of the actual work, because then the candidate gets some value out of it: “Doing this work is going to be like the stuff I’ll do every day, so here’s a preview.”

On Interviewing.io, what we’ve seen is that among our customers who have people do coding challenges after their technical interviews, if those challenges take longer than an hour, the best people tend to drop out, because in this market, engineers are flooded with opportunity. If you make them do work, they’re probably not going to do it unless it’s really, really interesting work. Some companies pay people: if you have something that’s going to take five or six hours, consider giving candidates a consulting fee and see if that changes anything. But you should probably either have a really good challenge that people want to do or not have a challenge at all. That’s different for data science and engineering, where sometimes a challenge makes more sense. For software, you probably don’t want to do it.

Gretchen DeKnikker: I have one I want to put in because we’ve heard this a few times. We’ve been recommending it and everyone’s like, “It’s in private beta. How do we get access?”

Aline Lerner: Yeah, we’re opening it up really, really soon. We have a really long waiting list, and we’re excited to get through it. For now, we’ve been favoring people who are based in the U.S., because it means we can place them a little more easily. We don’t always look at it, but in tie situations, we’ll potentially look at someone’s years of experience, because we have more job openings for senior folks than junior ones. Regardless, we’re working on a way to open it up, and I expect it will be open by next quarter. So, to everyone on the waiting list, I’m really sorry, and if you have job interviews coming up soon, send me an email and we’ll see what we can do.

Gretchen DeKnikker: Yeah. Awesome. Yeah. It’s a great problem to have though, right?

Aline Lerner: Well, we really want to move on from that and just open it up, but yes.

Gretchen DeKnikker: The next question is from someone who’s unfamiliar with it but very interested: How anonymous is anonymous? First names, voices? Does that anonymity help level the playing field for women and people of color?

Aline Lerner: Yep. When we say anonymous, we mean truly anonymous. Everybody gets a handle. My handle on the platform is nihilistic defenestration. I think I’m the only one who has that, so if you ever run into it, it’s me. It’s why I quote Nietzsche and wear black, but, in some cases …

For practice interviews, you can hear people’s voices. From voice, you could potentially glean gender, and we don’t mask accents. However, just two days ago, we got a patent on real-time voice masking. In real time, we can make women sound like men, men sound like women, or everyone sound androgynous. If a company wants to use that for their interviews, they have to turn it on across the board. If you let candidates decide, then there’s another bias issue: who turns it on and who doesn’t. This way, it’s on for everyone, and we leave that to our customers’ discretion.

We did try making everybody change genders in practice to see what effect that would have, and we found that, surprisingly, at least to me, it didn’t really change how people did. Women didn’t do better when they sounded like men, and men didn’t do better when they sounded like women. We did notice that women were doing a little worse across the entire platform, and I was confused by that, because I don’t think women are worse at computers.

It turned out that women were disproportionately quitting the platform after one bad performance in practice. Once we corrected for people who quit after one bad performance, the gender-based disparity went away entirely. We’re actually rerunning that analysis now that we have a lot more interviews, and we’ll report back. Back then, we had a lot fewer, so all of that … As is the case with science, or in our case, pseudo-science, this stuff can be overturned.

Gretchen DeKnikker: All right. I’m going to do one more. We have 1,000 questions. I think we could stay on this topic all day. You do have a blog, right?

Aline Lerner: Yeah.

Gretchen DeKnikker: So, if you want more from Aline, check out the blog on Interviewing.io because she’s-

Aline Lerner: I’ll put it in the chat.

Gretchen DeKnikker: Perfect. Yeah. There’s a ton of information. So, I’m going to do one more. You touched on this a little bit. Did any data, anecdotal or otherwise, bubble up around bias? For example, around knowledge of algorithms, which can favor people who learned them recently, like younger candidates or those who got computer science degrees, versus people coming from alternative backgrounds?

Aline Lerner: Oh, God, yes. There’s so much bias. The most compelling bias, or, I guess, the strongest signal of bias we’ve seen, has been against people with non-traditional educational and work backgrounds. If you didn’t go to a top school and you didn’t work at a top company, it’s going to be really, really hard for you to get in the door. What we’ve seen repeatedly, and this is the thing that blows my mind, is that with some of our bigger customers, who get a lot of inbound applications, people applied and got rejected at the resume screen, before anybody ever interviewed them. Then they came to our platform and practiced until they got good enough, or, in many cases, they were already good enough, got access to our employer portal, interviewed with those same companies, and actually got hired.

Of course, once they unmask after their interview, the recruiting team can see, “Oh, shit. This person is in our ATS, but we rejected them six months ago before anyone talked to them. Oh, shit. There’s something wrong here.” In fact, 40% of the hires we’ve made in the last two years have been people who would have been [inaudible 00:21:03], or who actually had been turned away by that employer. Companies admitted it; they’re like, “Well, I never would have … What the hell?” That’s why we insist that interviews be anonymous.

Gretchen DeKnikker: All right. Well, I would love to stay doing this. Thank you so much for coming in and giving this talk. I think it was hugely valuable. Everybody, we’re going to take a short break. We will be back in 15 minutes, so go grab a snack and some coffee and we’ll see you at 1:20. Bye.

Aline Lerner: Bye, everybody. Thank you for having me.

Gretchen DeKnikker: Thank you.